Stop guessing: 5 AI architecture patterns that actually prevent project failure

Battle-tested architectural solutions for AI systems that last beyond the prototype stage

You know the feeling, right? That initial rush when AI generates code in seconds. Pure magic. Until it isn’t. Until you’re weeks deep, staring at a tangled mess that nobody understands, least of all the AI that wrote it. That prototype that looked so promising? Now it’s a ticking time bomb of technical debt.

This isn’t just bad luck. It’s the predictable outcome of guessing your way through AI development. Treating AI systems like traditional software is a recipe for disaster. Why? Because AI introduces unique challenges: non-determinism, context decay, data drift… the list goes on. Ignoring these realities leads straight to stalled projects and wasted resources.

But what if you could stop guessing? What if there were proven blueprints – architectural patterns – specifically designed for the chaos of AI development? Patterns that address the philosophical foundation of AI-First development and ensure your projects don’t become unsustainable in the long run?

Good news: there are. And they’re not complex academic theories. They are battle-tested structures that successful teams use to build AI systems that last. Systems that are maintainable, scalable, and actually deliver on the promise of AI without the crippling hangover.

Forget “vibe coding.” It’s time to build with intention. It’s time to adopt a structured approach to development. These patterns are your starting point to transform the way you approach AI development and prevent the context crisis that’s silently undermining productivity.

According to [Gartner (July 2024)](https://www.computerworld.com/article/3478532/nearly-one-in-three-genai-projects-will-be-scrapped.html), “At least 30% of generative AI (GenAI) projects will be abandoned after proof of concept by the end of 2025, due to poor data quality, inadequate risk controls, escalating costs or unclear business value.” This stark prediction highlights the critical need for structured approaches.

Let’s dive into the 5 architecture patterns that separate the AI success stories from the cautionary tales.

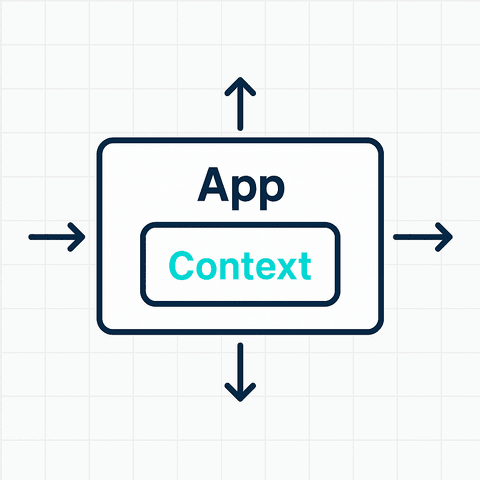

Pattern 1: The context-aware monolith – start smart, not complex

The core problem: Your AI needs context. Without it, interactions become disjointed, repetitive, and frankly, dumb. As explained by experts in AI usability and testing (like Frank Spillers or the folks at testRigor), understanding the ‘who, what, where, when, why’ is crucial for AI to provide genuinely useful responses rather than generic guesses. But when you’re building a simpler application – maybe a chatbot MVP or a focused content generator – jumping straight into complex microservice architectures for context management feels like overkill. How do you embed essential context awareness from day one without over-engineering?

The pattern – integrate context internally: The Context-Aware Monolith tackles this head-on. Instead of building a separate, complex pipeline for context, you integrate context management directly within the main application logic. Think of it as giving your monolith a dedicated ‘memory’. The application itself becomes responsible for capturing, storing (perhaps in a dedicated internal module, class, or specific database tables), and retrieving the necessary context (like user history, session data, previous prompts/outputs) needed for each AI interaction. This aligns with the fundamental need for AI systems to have memory or “cognition” to be effective.
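
To make the idea concrete, here is a minimal sketch of an in-process context module. It is an illustration under stated assumptions, not a reference implementation: `ContextStore`, `handle_request`, and `fake_model` are hypothetical names, the "memory" is a plain in-memory structure rather than database tables, and `fake_model` stands in for whatever model call your application actually makes.

```python
from collections import defaultdict, deque


class ContextStore:
    """Hypothetical in-process 'memory': keeps the last N turns per session."""

    def __init__(self, max_turns: int = 5):
        # One bounded deque per session; old turns fall off automatically.
        self._turns = defaultdict(lambda: deque(maxlen=max_turns))

    def record(self, session_id: str, prompt: str, output: str) -> None:
        self._turns[session_id].append((prompt, output))

    def build_prompt(self, session_id: str, new_prompt: str) -> str:
        # Prepend stored history so the model sees earlier turns.
        history = "\n".join(
            f"User: {p}\nAssistant: {o}" for p, o in self._turns[session_id]
        )
        return f"{history}\nUser: {new_prompt}".strip()


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call (assumption for this sketch)."""
    return f"echo({len(prompt)} chars)"


def handle_request(store, session_id, user_prompt, model=fake_model):
    """Application logic and context management live in the same process."""
    full_prompt = store.build_prompt(session_id, user_prompt)
    output = model(full_prompt)
    store.record(session_id, user_prompt, output)
    return output
```

Because the store is just another module inside the application, swapping the in-memory structure for database tables later changes `ContextStore` only, not the request handler.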

Why start here?

- Keep it simple: It’s far easier to implement and manage for smaller projects or initial versions (MVPs). Fewer moving parts mean faster initial development.

- Lower latency (at first): Context is immediately available within the application’s process, potentially reducing the overhead of external service calls.

- Unified logic: Development, core features, and context management live together, simplifying the initial codebase and debugging.

Warning: This pattern is best suited for applications with a relatively limited scope and scale. As complexity grows, the tight coupling between application logic and context management can become a bottleneck. Be prepared to evolve to a more decoupled pattern (such as the decoupled context pipeline in Pattern 2) as your needs expand.

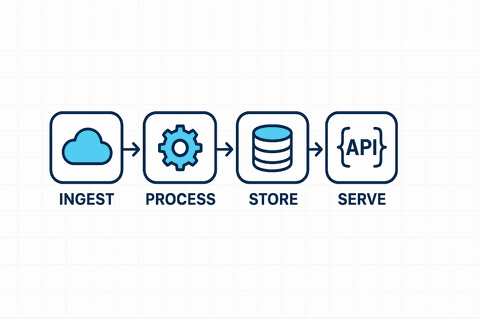

Pattern 2: The decoupled context pipeline

The problem it solves: Your AI system needs to handle complex context from multiple sources (user input, databases, external APIs), process it, enrich it, and make it consistently available to various AI models or agents. The context-aware monolith is starting to creak.

How it works: You build a dedicated, separate service or pipeline whose sole job is context management. This pipeline ingests raw context, processes it (e.g., embedding generation, summarization, entity extraction), stores it effectively (vector databases are common here), and serves it up to the AI models when needed. This is where a Living Context Framework (LCF) truly shines, helping teams achieve sustainable productivity gains.
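
The ingest, process, store, and serve stages can be sketched roughly as follows. Everything here is an assumption made for illustration: `ContextPipeline`, `embed`, and `cosine` are hypothetical names, the bag-of-words `embed` is a toy substitute for a real embedding model, and the Python list stands in for a vector database.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real pipeline would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ContextPipeline:
    """Hypothetical standalone context service: ingest -> process -> store -> serve."""

    def __init__(self):
        self._items = []  # stand-in for a vector database

    def ingest(self, source: str, text: str) -> None:
        cleaned = " ".join(text.split())  # processing stage: normalize whitespace
        self._items.append((source, cleaned, embed(cleaned)))

    def retrieve(self, query: str, k: int = 2) -> list:
        """Serve the k stored items most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda it: cosine(q, it[2]), reverse=True)
        return [(src, txt) for src, txt, _ in ranked[:k]]
```

A caller would ingest from each source, for example `pipeline.ingest("docs", "Refund policy lasts thirty days")`, and any model or agent can then ask `pipeline.retrieve("refund policy")` without knowing how the context was processed or stored.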

Benefits:

- Scalability: Context processing can scale independently of the main application.

- Modularity: Easier to update or swap out context processing techniques.

- Reusability: The processed context can potentially serve multiple AI models or applications.

Implementation notes: This pattern introduces more architectural complexity, and potentially more latency, than the monolith. It requires careful design of the pipeline stages and of the context storage.

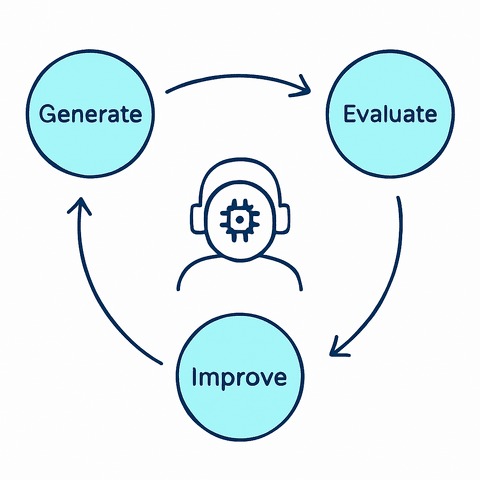

Pattern 3: The agentic feedback loop

The problem it solves: Your AI system needs to learn and adapt over time based on its own outputs or explicit user feedback. How do you build a system that isn’t static but continuously improves its performance or corrects its mistakes?

How it works: This pattern designs the system so that the AI’s output (or feedback on that output) is fed back into the system to modify future behavior. This could involve:

- Storing successful prompt/output pairs for few-shot learning.

- Using user ratings to fine-tune an underlying model.

- Having an AI agent analyze its own errors to generate corrective prompts.
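
As one possible illustration of the first option, the sketch below stores highly rated prompt/output pairs and replays them as few-shot examples in later prompts. The class name `FeedbackLoop`, the rating scale, and the thresholds are all assumptions made for this example; the rating threshold and the cap on stored examples are simple guards against low-quality feedback dominating future behavior.

```python
class FeedbackLoop:
    """Hypothetical store of well-rated prompt/output pairs used as few-shot examples."""

    def __init__(self, min_rating: int = 4, max_examples: int = 3):
        self.min_rating = min_rating
        self.max_examples = max_examples
        self._examples = []  # list of (rating, prompt, output)

    def record_feedback(self, prompt: str, output: str, rating: int) -> None:
        # Only feedback at or above the threshold influences future prompts.
        if rating < self.min_rating:
            return
        self._examples.append((rating, prompt, output))
        # Keep the best-rated examples; the stable sort preserves arrival order on ties.
        self._examples.sort(key=lambda e: e[0], reverse=True)
        del self._examples[self.max_examples:]

    def build_prompt(self, new_prompt: str) -> str:
        # Feed past successes back in as few-shot examples.
        shots = "\n\n".join(
            f"Input: {p}\nOutput: {o}" for _, p, o in self._examples
        )
        return f"{shots}\n\nInput: {new_prompt}\nOutput:".lstrip()
```

The same shape extends to the other options: the stored tuples could instead be exported as fine-tuning data, or an agent could append corrective instructions rather than examples.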

Benefits:

- Self-improvement: The system can potentially get better over time without constant manual intervention.

- Adaptability: Can adjust to changing data patterns or user preferences.

- Resilience: May learn to recover from certain types of errors.

Implementation notes: This pattern requires careful design to avoid self-reinforcing feedback loops or biases, so monitoring is crucial. It can also be complex to implement and debug.

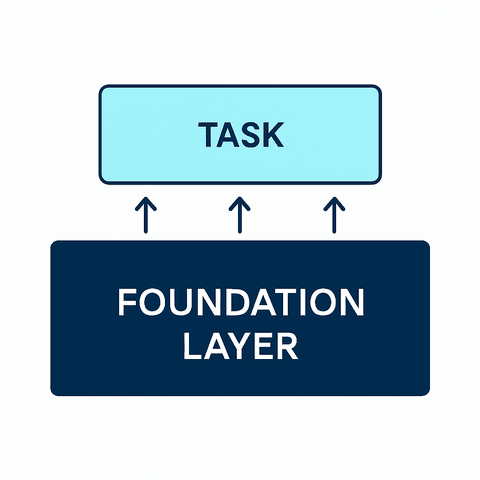

Pattern 4: Stratified AI systems (foundation + application layers)

The problem it solves: You want to leverage powerful, general-purpose foundation models (like GPT-4, Claude 3) but need to apply them to very specific tasks or domains without constantly fine-tuning the massive base model. How do you layer specialized intelligence on top of general capabilities?

How it works: You create distinct architectural layers.

- Foundation layer: Houses the large, general-purpose AI model(s). Handles core language understanding, generation, or other broad capabilities.

- Application/task layer: Contains smaller, specialized models, prompt templates, business logic, and context specific to your application. This layer orchestrates calls to the foundation layer, adding the necessary task-specific context and interpreting the results. This implements the intent-driven architecture discussed in the AI-First Development Framework Guide.
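
The two layers might be separated along these lines. This is a sketch under assumptions, not the framework's prescribed design: `FoundationModel`, `StubFoundationModel`, and `TicketClassifier` are hypothetical names, the ticket-classification task is invented for illustration, and the stub returns canned answers in place of a real hosted model.

```python
from typing import Protocol


class FoundationModel(Protocol):
    """Foundation layer contract: any general-purpose model exposing complete()."""

    def complete(self, prompt: str) -> str: ...


class StubFoundationModel:
    """Stand-in for a hosted general-purpose model; returns canned answers."""

    def complete(self, prompt: str) -> str:
        return "label: refund" if "money back" in prompt else "label: other"


class TicketClassifier:
    """Application layer: owns the prompt template, domain context, and parsing."""

    TEMPLATE = (
        "You classify support tickets.\n"
        "Allowed labels: {labels}\n"
        "Ticket: {ticket}\n"
        "Answer as 'label: <name>'."
    )

    def __init__(self, model: FoundationModel, labels: list[str]):
        self.model = model
        self.labels = labels

    def classify(self, ticket: str) -> str:
        # Inject task-specific context, call the foundation layer, interpret the result.
        prompt = self.TEMPLATE.format(labels=", ".join(self.labels), ticket=ticket)
        raw = self.model.complete(prompt)
        label = raw.removeprefix("label:").strip().lower()
        return label if label in self.labels else "unknown"
```

Because the application layer depends only on the `complete()` contract, the foundation model can be replaced without touching the business logic, although the template may still need re-tuning.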

Benefits:

- Reusability: Leverage powerful foundation models across multiple applications.

- Faster development: Focus application development on the specific task layer.

- Easier updates: Update foundation models with potentially less impact on application logic (though prompt engineering might need adjustments).

Implementation notes: This pattern requires a clearly designed API between the layers. Managing prompts and context injection at the application layer becomes critical.

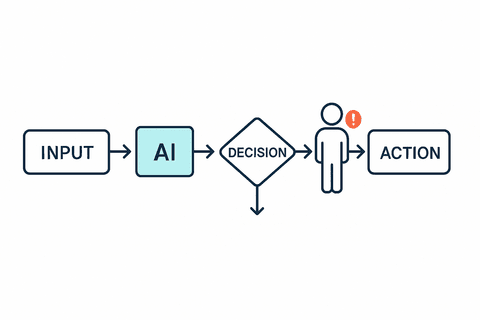

Pattern 5: Human-in-the-loop orchestration

The problem it solves: Your AI system operates in a high-stakes domain (e.g., medical, financial) where errors are unacceptable, or it encounters situations with high ambiguity where AI alone cannot make a reliable decision. How do you blend AI automation with necessary human judgment?

How it works: You explicitly design points in the workflow where human intervention is required or requested. This could be:

- AI flags low-confidence predictions for human review.

- A human must approve critical actions proposed by the AI.

- Users provide feedback that directly corrects or guides the AI’s next steps within the process.

- The system routes ambiguous cases to a human expert queue.
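
A minimal routing sketch for these intervention points could look like the following. The names `Prediction` and `ReviewRouter`, the 0.85 threshold, and the `wire_transfer` action are assumptions chosen for illustration; real trigger criteria depend on your domain and risk tolerance.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    action: str
    confidence: float


class ReviewRouter:
    """Hypothetical router: sends risky or uncertain predictions to a human queue."""

    def __init__(self, threshold: float = 0.85,
                 critical_actions: frozenset = frozenset({"wire_transfer"})):
        self.threshold = threshold
        self.critical_actions = critical_actions
        self.review_queue = deque()  # stand-in for a human expert queue

    def route(self, pred: Prediction) -> str:
        # Critical actions always need approval; so do low-confidence predictions.
        if pred.action in self.critical_actions or pred.confidence < self.threshold:
            self.review_queue.append(pred)
            return "human_review"
        return "auto_approved"

    def resolve_next(self, approved: bool):
        """Record a reviewer's decision on the oldest queued prediction."""
        pred = self.review_queue.popleft()
        return pred, ("approved" if approved else "rejected")
```

The decisions returned by `resolve_next` are also a natural source of labeled data for later training, and the queue length is a direct measure of the review bottleneck.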

Benefits:

- Safety & reliability: Reduces the risk of critical errors in sensitive domains.

- Trust: Increases user and stakeholder trust in the system.

- Handling ambiguity: Leverages human judgment for situations AI struggles with.

- Data generation: Human interactions can generate valuable data for future AI training.

Implementation notes: This pattern requires efficient user interfaces for human reviewers, and you need to manage the bottlenecks that review times can cause. Define clear criteria for when human intervention is triggered. This pattern aligns with the ethical considerations emphasized in AI-First development.

Stop guessing, start building intentionally

Building with AI doesn’t have to feel like navigating a minefield blindfolded. These five patterns provide proven structures to tackle the inherent challenges of AI development head-on. They transform uncertainty into intentional design.

Choosing the right pattern (or combination of patterns) depends on your specific project’s scale, complexity, and requirements. But the core principle remains: structure prevents failure. Whichever pattern you choose, secure the implementation with proper tools like those described in Securing AI Code with Snyk.

Stop leaving the success of your AI projects to chance. Explore these patterns, understand their trade-offs, and start building AI systems that are not just powerful today, but sustainable tomorrow. A solid architectural foundation is key to avoiding the pitfalls of AI development.

Ready to go deeper? These patterns are just one part of the comprehensive AI-First framework designed to guide your entire development lifecycle. Discover how The PAELLADOC Revolution is changing how teams approach AI development.