The AI-First Development Framework Guide

The silent chaos of AI without a method

Your team’s using AI to write code, right? Sounds great on paper. Faster. More “productive.” But let’s be honest: something feels off.

You find yourself staring at lines of code that worked fine three months ago, but now nobody dares touch them. AI-generated code, sure, but without a shred of context about why it’s there or how it fits into the bigger picture. Trying to understand it is like deciphering hieroglyphics. Hours, even days, wasted. Frustration mounts.

That supposed AI productivity vanishes. Worse, it becomes a dead weight. AI projects that promised innovation stall out, burning budget on hidden maintenance and refactoring costs. Good talent, the kind that can’t stand working blind, starts looking for the exit. Meanwhile, others are figuring out how to make it work.

For a deeper dive into the underlying philosophical context at the heart of this problem, see The Hidden Truth About AI-First Development.

Do you recognize this downward spiral in your own team?

Learn more about how PAELLADOC tackles this with context-first principles in The PaellaDoc Revolution: AI-First Development.

The story no one tells you (but you’ve probably lived it)

Picture this: Team Alpha eagerly adopts AI assistants. At first, it feels like magic. Code flies. Story points soar. Management is thrilled.

Six months later.

Maria, a senior developer, needs to modify a critical feature built with AI help. She opens the code. It works, yes. But she has zero idea why a specific design choice was made. The associated documentation is generic, or worse, missing. The colleague who “supervised” the AI is no longer with the company. What should be a two-day fix turns into a two-week nightmare of reverse engineering, blind testing, and praying she doesn’t break anything. That initial “speed” came at a steep price, one paid back with interest in frustration.

Now, picture Team Beta. They use AI too, but from day one, they adopted an AI-First framework. When Carlos, a new developer, needs to update a similar module, he finds the code, yes, but also the living context attached: the key design decisions, the requirements that drove it, the tests validating it, all anchored directly to the logic. Instead of fear, he feels confident. He makes the change in a morning, fully understanding the impact. Team Beta isn’t just fast; they’re sustainably fast.

The difference isn’t the AI they use. It’s the method. Team Alpha applied patches. Team Beta built on a solid foundation.

The harsh reality: the (verified) numbers don’t lie

Maria’s story isn’t unique. It’s the silent norm in too many teams adopting AI without a strategy. The numbers paint a worrying picture:

- The time black hole: Developers already lose huge amounts of time just trying to understand code. The 2024 Stack Overflow Developer Survey, the most recent available at the time of writing, found that 61% of developers spend more than 30 minutes per day searching for answers or solutions (Source: Stack Overflow 2024 Developer Survey). Imagine how much worse this gets with machine-generated code lacking proper context.

- Exponential technical debt: Code without clear context is the perfect recipe for runaway technical debt. Studies consistently show that the costs associated with poor software quality and technical debt are staggeringly high, impacting budgets through increased maintenance, bug fixing, and operational failures. For instance, SIG’s 2025 Finance Signals report highlights multi-million euro annual costs per system due to poor maintainability, and cites Gartner estimates that up to 70% of IT budgets go to ‘keeping the lights on’ (Source: SIG - How poor maintainability drains 2025 IT budgets in finance). AI, used without control, significantly amplifies this problem.

- The talent drain: Good developers hate working blind. Lack of context and constant frustration are direct causes of turnover. Replacing an experienced developer in a technical role can cost around 80% of their annual salary, according to recent Gallup research (Source: Gallup, July/Sept 2024). How much is that initial AI “productivity” really costing you?

- AI projects that never launch: Statistics vary, but a significant number of corporate AI initiatives never reach full production or fail to deliver expected value, often due to integration, maintenance, and scalability problems. Learn why many initiatives stall in Your AI Projects Are Unsustainable - Here’s Why.

For a deeper dive into the hidden costs of unstructured AI adoption, see The dangerous illusion of AI productivity (and how to achieve real gains).

The revelation: it’s not magic, it’s method (and it’s urgent)

So, what’s the way out of this cycle of frustration and hidden costs? It’s not abandoning AI. It’s not working harder patching old systems.

The solution is a fundamental shift in how we build software from the ground up. It’s moving from merely using AI tools to adopting an AI-First approach.

Introducing the AI-First Development Framework.

This isn’t just another tool to add to your stack. It’s a paradigm shift. A complete methodology that integrates AI and, crucially, context management (the famous ‘why’ behind the code) into every phase of the development lifecycle.

While others keep trying to force AI’s power into old molds, creating accidental complexity and technical debt, teams adopting an AI-First framework build differently. They build on a foundation designed for AI, where code and its context evolve together.

The difference is clear: Some fight the current, others navigate with it. This framework isn’t an incremental improvement; it’s the difference between survival and leadership in the age of AI-assisted development.

Paradigm contrast: traditional vs AI-First development

| Paradigm | Primary focus | Knowledge treatment | Decision approach | Primary output |
|---|---|---|---|---|
| Traditional | Functionality | Static documentation | Pre-development | Code correctness |
| AI-First | Context preservation | Living, evolving knowledge | Intent-driven | Context + code |

Note: AI-First development treats knowledge as the primary artifact, making context (the why) the foundation for every code change.

Tip: Embedding context from day one prevents the confusion and technical debt common in legacy systems.

Breaking down the framework: the pillars of success

Foundational principles: the unshakeable bedrock of the framework

For AI-First Development to be a sturdy methodology rather than just a buzzword, it has to rest on a set of philosophical principles that change everything. These aren’t just guidelines; they are the framework’s DNA.

These principles redefine how we value context, design architecture, and conceive human-AI collaboration. Instead of seeing code as the sole king, they enthrone contextual knowledge and purpose (intent) as central elements.

We’ve explored these 5 philosophical principles in detail in our foundational article:

➡️ Essential reading: The Hidden Truth About AI-First Development

Understanding these principles – from treating Context as Primary Creation to adopting a Contextual Decision Architecture – is the indispensable first step to successfully implementing the AI-First framework. That article digs deep into the why behind each one, backed by data and analysis.

For the purpose of this general guide to the framework, the key takeaway is that these principles form the foundation upon which the architectures, patterns, and tools we’ll discuss next are built. Without them, any AI-First implementation will lack the soundness needed to be sustainable.

AI-First architecture: designed for the future (not the past)

If the principles are the framework’s soul, the architecture is its skeleton. Trying to run an AI-First strategy on a traditional architecture is like putting a Formula 1 engine in a horse-drawn carriage – it just doesn’t work. Monolithic architectures or even classic microservices, designed primarily around functionality, choke on the complexity, data management needs, and the critical requirement for context that AI demands.

AI-First architecture changes the game.

It’s not just about where you put your containers. It’s about designing the flow of information and, crucially, context, from the very beginning. Think of systems where the ‘why’ travels alongside the ‘what’ and the ‘how’.

Some key concepts defining this architecture include:

Context-aware services:

- What they are: Components (think microservices, but smarter) that not only perform a task but understand and operate based on the context of the decision or the data they process.

- Key benefit: Eliminates the “black boxes”; every part of the system knows why it’s doing what it’s doing, making debugging and evolution easier.
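To make this concrete, here is a minimal sketch of a context-aware service, assuming a hypothetical `DecisionContext` record that travels with every call. The class names, fields, and the discount example are illustrative assumptions, not part of PAELLADOC or any LCF API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DecisionContext:
    """Hypothetical record of the 'why' that accompanies a request."""
    intent: str                       # business goal behind the call
    decided_by: str                   # who made the underlying design decision
    constraints: tuple[str, ...] = ()


@dataclass
class DiscountService:
    """Illustrative service that records why each result was produced."""
    log: list[dict] = field(default_factory=list)

    def apply(self, price: float, rate: float, ctx: DecisionContext) -> float:
        result = round(price * (1 - rate), 2)
        # Keep the reason next to the outcome so debugging has both.
        self.log.append({"intent": ctx.intent, "input": price, "output": result})
        return result


service = DiscountService()
ctx = DecisionContext(intent="retain churn-risk customers", decided_by="pricing team")
final_price = service.apply(100.0, 0.15, ctx)
```

The design choice worth noticing is that `service.log` stores the intent beside each result, so a later reader can ask why a price changed, not only what it changed to.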

Intent-driven design:

- What it is: The system’s structure is organized around business goals or user intentions, not just technical functions. Purpose guides the form.

- Key benefit: Directly aligns technology with business value, making it easier to measure impact and prioritize changes.

Data/context mesh:

- What it is: Decentralizing ownership of, and access to, data and its associated context, treating both as a product. Specific teams own their data and context domains.

- Key benefit: Breaks down information and context silos, allowing different parts of the system (and different AI models) to reliably and scalably access and understand relevant information.

This way of building systems doesn’t just support knowledge preservation; it enables it. It greatly eases the implementation of solutions like the Living Context Framework (LCF), ensuring context isn’t an afterthought but an integral part of the system’s fabric.

An AI-First architecture isn’t just more technically scalable or resilient; it’s fundamentally more intelligible and adaptable, ready for a future where AI isn’t the exception, but the norm. To see how these architectural principles manifest in practice, explore our guide to 5 AI architecture patterns that actually prevent project failure.

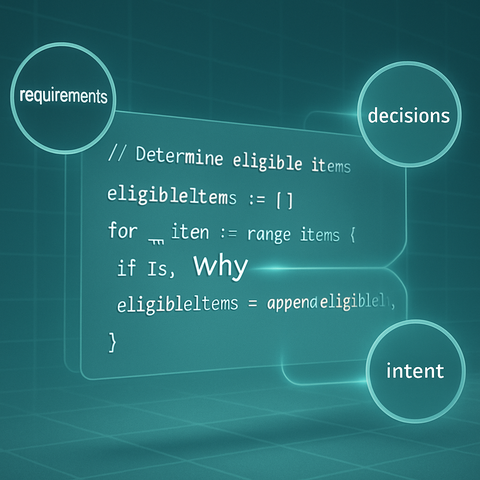

AI-native design patterns: code that (almost) explains itself

A good architecture needs good building blocks. Design patterns are those tested, reusable solutions we apply when writing code. But classic patterns (GoF, etc.), while valuable, weren’t designed for a world where much of the code might be machine-generated and where context is as vital as logic.

We need AI-native design patterns. These don’t replace the old ones but complement them, focusing on clarity, traceability, and context preservation in a hybrid human-AI environment.

Some key examples your team should start using now:

Context injection:

- What it is: A pattern where relevant context (the ‘why’, requirements, design decisions) is programmatically “injected” or directly associated with specific code blocks, often using structured metadata or tools like LCF.

- Key benefit: Makes the invisible visible; the code’s purpose is right there, not lost in a document or someone’s head. Drastically cuts down understanding time.
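One lightweight way to approximate context injection in plain Python is a decorator that attaches structured metadata to a function. This is only a sketch: the `with_context` helper, its field names, and the requirement id are hypothetical, and a real LCF tool may store context very differently.

```python
def with_context(*, why: str, requirement: str, decided_on: str):
    """Attach the 'why' to a function as structured metadata (hypothetical helper)."""
    def decorator(func):
        # Store the context on the function object itself, next to the logic.
        func.context = {
            "why": why,
            "requirement": requirement,
            "decided_on": decided_on,
        }
        return func
    return decorator


@with_context(
    why="Retries are capped at 3 because the upstream API rate-limits bursts",
    requirement="REQ-EXAMPLE-1",   # hypothetical requirement id
    decided_on="2024-01-15",       # hypothetical decision date
)
def max_retries() -> int:
    return 3


# Any tool or reviewer can now read the reason alongside the code.
reason = max_retries.context["why"]
```

Because the metadata lives on the function object, it can be read by review tooling or printed in a debugger without opening a separate document.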

Explainable AI by design (XAIbD):

- What it is: Designing code and models in a way that inherently makes explaining their decisions or outputs easier. It involves choosing more interpretable model architectures or including explanation mechanisms from the start.

- Key benefit: Turns black boxes into glass boxes. Allows you to trust, debug, and improve AI components with insight rather than guesswork.
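As a small illustration of the idea, the sketch below uses a linear scoring model, one of the simplest interpretable architectures, and returns each feature’s contribution together with the score. The weights, feature names, and bias are made-up assumptions for the example, not a recommended credit model.

```python
# Hypothetical weights for an illustrative linear scoring model.
WEIGHTS = {"income": 0.5, "debt": -0.8, "years_employed": 0.2}
BIAS = 0.1


def score_with_explanation(features: dict[str, float]) -> tuple[float, dict[str, float]]:
    """Return the score and the per-feature contributions that add up to it."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    return BIAS + sum(contributions.values()), contributions


score, why = score_with_explanation(
    {"income": 2.0, "debt": 1.0, "years_employed": 3.0}
)
# The feature with the largest absolute contribution drove the decision most.
top_factor = max(why, key=lambda name: abs(why[name]))
```

The explanation is not bolted on afterwards: the same arithmetic that produces the score also produces the reasons, which is the essence of explainability by design.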

Self-contextualizing code:

- What it is: Writing code that, through clear naming conventions, modular structures, and the use of living context tools (LCF), minimizes the need for external documentation to understand its immediate purpose. The code and its associated context tell the story together.

- Key benefit: Reduces reliance on external documentation that’s almost always out of date. Speeds up code review and future maintenance.

Context-driven testing:

- What it is: Designing test cases that not only validate functionality (the ‘what’) but also ensure the code behaves correctly according to the context and intent it was created for (the ‘why’).

- Key benefit: Ensures code doesn’t just “work,” but “works for the right reason,” catching subtle logic errors that traditional functional tests might miss.
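Here is one way such a test pair might look, assuming a hypothetical `Intent` record that states the purpose in words and as a checkable rule. The pricing function and the numbers are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable


def discounted_price(price: float, rate: float, cost: float) -> float:
    """Apply a discount, but never sell below cost."""
    return max(price * (1 - rate), cost)


@dataclass(frozen=True)
class Intent:
    """Hypothetical record pairing a stated purpose with a checkable rule."""
    statement: str
    holds: Callable[[float, float, float], bool]


INTENT = Intent(
    statement="Discounts exist to move stock, never to sell at a loss",
    holds=lambda price, rate, cost: discounted_price(price, rate, cost) >= cost,
)


def test_function():
    # The 'what': a 50% discount on 100 gives 50.
    assert discounted_price(100.0, 0.5, 20.0) == 50.0


def test_intent():
    # The 'why': no discount rate may push the price below cost.
    for rate in (0.0, 0.5, 0.9, 1.0):
        assert INTENT.holds(100.0, rate, 20.0), INTENT.statement


test_function()
test_intent()
```

If someone later removes the `max(..., cost)` guard, `test_function` still passes while `test_intent` fails and reports the violated intent in plain language.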

Think of these patterns as the “director’s notes” embedded directly into your code’s musical score. Anyone can pick it up and understand not just the notes, but the intention behind the music.

Adopting these patterns takes discipline, yes. But the payoff is huge: code that’s easier to understand, maintain, and evolve, even when AI is a primary contributor. It’s the difference between code that merely works and code that lasts.

Ethical considerations: the indispensable moral compass of AI-First development

Let’s be blunt: AI isn’t neutral, and ignoring ethics isn’t just irresponsible—it’s a ticking time bomb for your business. Models trained on biased data perpetuate and amplify societal prejudices. Automated decisions, opaque and unchecked, can lead to discriminatory outcomes, legal nightmares, and a catastrophic loss of user trust. Think loan applications unfairly denied, hiring algorithms filtering out qualified candidates, or medical diagnoses missing the mark for certain demographics. This isn’t science fiction; it’s the costly reality of deploying AI without an ethical compass.

The AI-First framework tackles this head-on by integrating ethics by design, making it inseparable from the development process itself, not a checkbox ticked at the end. Here’s how its principles translate into practice:

Radical transparency (where possible):

- The problem: AI models often act as “black boxes,” making it impossible to understand why they reach a certain conclusion.

- The AI-First solution: Leverage the Living Context Framework (LCF) to meticulously document data sources, model versions, training parameters, and known limitations alongside the code. This isn’t just documentation; it’s an auditable trail. Implement Explainable AI (XAI) techniques from the start (as discussed in patterns), choosing interpretable models when feasible and integrating tools that provide decision reasoning.

- Why it’s vital: Builds crucial trust with users, regulators, and your own team. Enables effective debugging and identification of flaws before they cause public harm or PR disasters. Demonstrates real commitment, not just lip service.

Active bias mitigation:

- The problem: Assuming data is neutral is naive. Historical data reflects historical biases, which AI will gleefully learn and scale.

- The AI-First solution: Implement a proactive bias detection and mitigation workflow. This includes: rigorously auditing datasets for representational biases before training; using fairness metrics (e.g., demographic parity, equalized odds) during model evaluation; employing techniques like adversarial debiasing or re-weighting; and testing specifically against known bias vectors relevant to your domain. Link these tests and results back to the code’s context in the LCF.

- Why it’s vital: Prevents discriminatory outcomes that harm users and attract lawsuits. Ensures your product serves your entire potential market fairly. Protects your brand from the reputational damage of biased AI.
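The two fairness metrics named above can be computed in a few lines. The sketch below is a simplified, dependency-free version on toy data; the function names and the data are assumptions for illustration, and it assumes binary labels and that every group contains both positive and negative examples.

```python
def _rate_gap(y_pred, group, mask):
    """Gap between groups in mean prediction over the rows selected by mask."""
    rates = []
    for g in sorted(set(group)):
        rows = [p for p, gg, m in zip(y_pred, group, mask) if gg == g and m]
        rates.append(sum(rows) / len(rows))  # assumes each group has such rows
    return max(rates) - min(rates)


def demographic_parity_gap(y_pred, group):
    """Difference in positive-prediction rate between groups."""
    return _rate_gap(y_pred, group, [True] * len(y_pred))


def equalized_odds_gap(y_true, y_pred, group):
    """Larger of the true-positive-rate gap and the false-positive-rate gap."""
    tpr_gap = _rate_gap(y_pred, group, [t == 1 for t in y_true])
    fpr_gap = _rate_gap(y_pred, group, [t == 0 for t in y_true])
    return max(tpr_gap, fpr_gap)


# Toy data: two groups of four people each.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]

dp_gap = demographic_parity_gap(y_pred, group)
eo_gap = equalized_odds_gap(y_true, y_pred, group)
```

A gap of 0 means the groups are treated identically on that metric. What threshold counts as acceptable is a policy decision for your domain, and it is a good candidate for recording in the LCF next to the test results.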

Privacy by design:

- The problem: AI models, especially LLMs, can inadvertently memorize and expose sensitive training data (PII). Rushing features often leads to privacy breaches discovered after deployment.

- The AI-First solution: Integrate privacy from the architecture level. Use context-aware services designed to handle PII appropriately. Apply data minimization principles aggressively – only collect and use the data strictly necessary. Implement robust anonymization, pseudonymization, or differential privacy techniques during data preparation, not as an afterthought. Document these privacy controls within the LCF for traceability.

- Why it’s vital: Ensures compliance with GDPR, CCPA, and evolving regulations, avoiding massive fines. Protects your users’ fundamental rights. Builds a reputation as a trustworthy custodian of data – a massive competitive advantage.
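As a rough sketch of data minimization plus pseudonymization during data preparation, the example below drops fields that are not needed and replaces the email with a keyed hash. The field names and the key are placeholders; in practice the key would come from a secrets manager, and keyed hashing is pseudonymization rather than full anonymization.

```python
import hashlib
import hmac

# Placeholder key for illustration only; never hard-code a real key.
SECRET_KEY = b"example-key-do-not-use"


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a stable keyed hash (HMAC-SHA256)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, allowed: set[str]) -> dict:
    """Keep only the fields the downstream task strictly needs."""
    return {k: v for k, v in record.items() if k in allowed}


raw = {"email": "maria@example.com", "name": "Maria", "plan": "pro"}

prepared = minimize(raw, allowed={"email", "plan"})
prepared["email"] = pseudonymize(prepared["email"], SECRET_KEY)
```

The same email always maps to the same token, so records can still be joined, but the original address cannot be read from the prepared data without the key.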

Clear governance and auditing:

- The problem: Without clear ownership and processes, ethical considerations get lost in the shuffle of development sprints. Who is responsible when an AI makes a harmful decision?

- The AI-First solution: Establish an AI Ethics Review Board or designated ethics champions within development teams. Define clear policies for AI use, data handling, and model deployment. Implement automated audit trails leveraging the LCF, logging key decisions, model performance metrics, and ethical reviews. Conduct regular internal and potentially external audits of AI systems’ impact.

- Why it’s vital: Guarantees accountability and clear lines of responsibility. Provides a framework for adapting to new ethical challenges and regulations. Proves to stakeholders (investors, customers, regulators) that you are managing AI risks proactively and responsibly.
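An automated audit trail can be sketched as an append-only log in which each entry includes the hash of the previous one, so later tampering is detectable. This is a simplified illustration under that assumption; the event fields are invented, and it does not represent how any particular LCF implementation stores its logs.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder 'previous hash' for the first entry


def _digest(prev: str, event: dict) -> str:
    body = json.dumps({"prev": prev, "event": event}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(trail: list[dict], event: dict) -> None:
    """Append an event, chaining it to the hash of the previous entry."""
    prev = trail[-1]["hash"] if trail else GENESIS
    trail.append({"prev": prev, "event": event, "hash": _digest(prev, event)})


def verify(trail: list[dict]) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in trail:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True


trail: list[dict] = []
append_entry(trail, {"action": "ethics_review", "result": "approved"})
append_entry(trail, {"action": "model_deploy", "version": "1.2.0"})
is_intact = verify(trail)
```

Hash chaining only makes changes evident; it does not prevent them, so the trail still needs to live somewhere with proper access control.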

Ethical decision checklist:

- Identify potential impact: Who could be negatively affected?

- Check for bias: Have we audited data & model? Are fairness metrics met?

- Verify privacy: Is data minimized? Are protections adequate? Is consent clear?

- Ensure transparency: Can we explain the decision? Is it documented in LCF?

- Review & Approve: Has it passed ethical review/governance checks?
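A checklist like this can also be enforced as a simple release gate. The sketch below is one possible encoding; the item keys are hypothetical names for the five checks above, and a real process would attach evidence to each answer rather than a bare boolean.

```python
# Hypothetical keys mirroring the five checklist items.
CHECKLIST = (
    "impact_identified",
    "bias_audited",
    "privacy_verified",
    "transparency_documented",
    "review_approved",
)


def missing_checks(answers: dict[str, bool]) -> list[str]:
    """Return the checklist items that are absent or answered False."""
    return [item for item in CHECKLIST if not answers.get(item, False)]


def may_release(answers: dict[str, bool]) -> bool:
    """A change may ship only when every checklist item is satisfied."""
    return not missing_checks(answers)


answers = {
    "impact_identified": True,
    "bias_audited": True,
    "privacy_verified": False,   # e.g. consent wording still under review
    "transparency_documented": True,
}
blockers = missing_checks(answers)
```

Treating an unanswered item the same as a failed one keeps the gate conservative: silence never counts as approval.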

Integrating ethics doesn’t slow innovation; it’s the guardrail ensuring your AI journey leads to sustainable, valuable, and human-centric outcomes. An AI-First system that ignores ethics is fundamentally flawed and destined to fail. Building ethically isn’t just the right thing to do; it’s the only smart way to build for the long term.

For practical security best practices with AI-generated code, check out Securing AI code with Snyk: A practical guide.

Tools and technologies: the gears that turn the framework (with LCF as the centerpiece)

A powerful framework needs the right tools to make it a daily reality. Good intentions aren’t enough; you need the right technological support. The AI-First ecosystem relies on several categories of tools, but one acts as the contextual glue holding everything else together:

Living context framework (LCF) - the keystone:

- What it is: We’ve discussed the critical importance of context. LCF (like the one PAELLADOC implements) is the technology that makes that principle real. It’s a system designed to capture, manage, and link the ‘why’ (requirements, decisions, discussions) directly to the ‘what’ (the code), dynamically and with version history. It’s not static documentation; it’s living knowledge.

- Key benefits:

  - Anchors lost context directly to the code, eliminating ambiguity at the source.

  - Transforms documentation from an outdated burden into a strategic asset that evolves with the software.

  - Unlocks true collaboration and understanding in AI-using teams, drastically cutting time wasted deciphering code.
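To picture what “living” and “versioned” could mean in code, here is a toy in-memory store that keeps an ordered history of context entries per code symbol. It is purely a sketch: `ContextStore` and its fields are hypothetical and are not PAELLADOC’s data model.

```python
from dataclasses import dataclass, field


@dataclass
class ContextStore:
    """Hypothetical store: code symbol -> ordered history of context entries."""
    history: dict[str, list[dict]] = field(default_factory=dict)

    def record(self, symbol: str, why: str, source: str) -> int:
        """Add a new context version for a symbol and return its version number."""
        entries = self.history.setdefault(symbol, [])
        entries.append({"version": len(entries) + 1, "why": why, "source": source})
        return len(entries)

    def latest(self, symbol: str) -> dict:
        """Return the most recent context entry for a symbol."""
        return self.history[symbol][-1]


store = ContextStore()
store.record("billing.apply_discount", "Cap discounts at 30%", "pricing review")
version = store.record(
    "billing.apply_discount", "Cap raised to 40% for annual plans", "pricing review"
)
current = store.latest("billing.apply_discount")
```

Older entries are never overwritten, so the history of why a piece of code changed stays available alongside the current reason.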

MLOps platforms (machine learning operations):

- What they are: Tools that automate and manage the entire lifecycle of machine learning models (training, deployment, monitoring, versioning).

- Why they’re key here: A solid MLOps platform, ideally integrated with or aware of the LCF, ensures context is also maintained throughout the specific lifecycle of AI models.

Context-aware dev tools:

- What they are: IDEs, linters, code review tools that can leverage LCF information to offer deeper insights, smarter validations, and more relevant suggestions to the developer.

- Why they’re key here: They make working with living context smooth and natural within the developer’s daily workflow.
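A context-aware linter does not need to be elaborate. The sketch below uses Python’s standard `ast` module to flag functions that lack a context decorator; the decorator name `with_context` and the sample source are assumptions made for the example.

```python
import ast


def functions_missing_context(source: str, decorator_name: str = "with_context") -> list[str]:
    """List functions in the source that lack the given decorator."""
    missing = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            names = set()
            for dec in node.decorator_list:
                # Handle both @name and @name(...) forms.
                target = dec.func if isinstance(dec, ast.Call) else dec
                if isinstance(target, ast.Name):
                    names.add(target.id)
                elif isinstance(target, ast.Attribute):
                    names.add(target.attr)
            if decorator_name not in names:
                missing.append(node.name)
    return missing


# The sample is only parsed, never executed, so with_context need not exist.
SAMPLE = '''
@with_context(why="cap retries", requirement="REQ-EXAMPLE-1")
def max_retries():
    return 3

def undocumented():
    return 42
'''

report = functions_missing_context(SAMPLE)
```

A check like this could run in CI or as a pre-commit hook, so code without attached context is caught during review rather than six months later.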

Context-driven testing tools:

- What they are: Testing frameworks that allow defining and running tests validating not just the function, but the intent captured in the LCF (as we saw in the patterns).

- Why they’re key here: They guarantee the software does the right thing for the right reason.

Technology alone isn’t the AI-First framework, but the right tools are absolutely necessary to implement it effectively and at scale. And at the heart of that technology, the ability to manage context as a living asset (the LCF) is what makes the fundamental difference.

Conclusion: time to choose between AI-driven chaos and AI-First leadership

We’ve laid out the full picture. On one hand, the easy (and dangerous) path: keep using AI as just a fast code generator, creating systems that work today but become incomprehensible tomorrow. It’s a path leading to frustration, waste, and falling behind.

On the other hand, there’s the AI-First way. A fundamental shift in focus that puts context and intent at the center of development. A framework built on solid principles, enabled by smart architecture and native design patterns, and powered by tools like the Living Context Framework (LCF).

This isn’t a theoretical path. It’s the practical, proven way to:

- Build AI-powered software that’s truly maintainable and scalable. Forget “black box” code.

- Accelerate your team sustainably. Not just initial sprints, but consistent long-term speed.

- Attract and keep top talent. Good developers want clarity and purpose, not chaos.

- Position your business to lead, not just survive, in the AI era.

The question is no longer if AI will change software development. The question is how you will adapt.

Will you keep fighting accidental complexity, or will you start building on a solid foundation designed for the future?